Microsoft’s Image Creator makes violent AI images of Biden, the Pope and more

- December 28, 2023

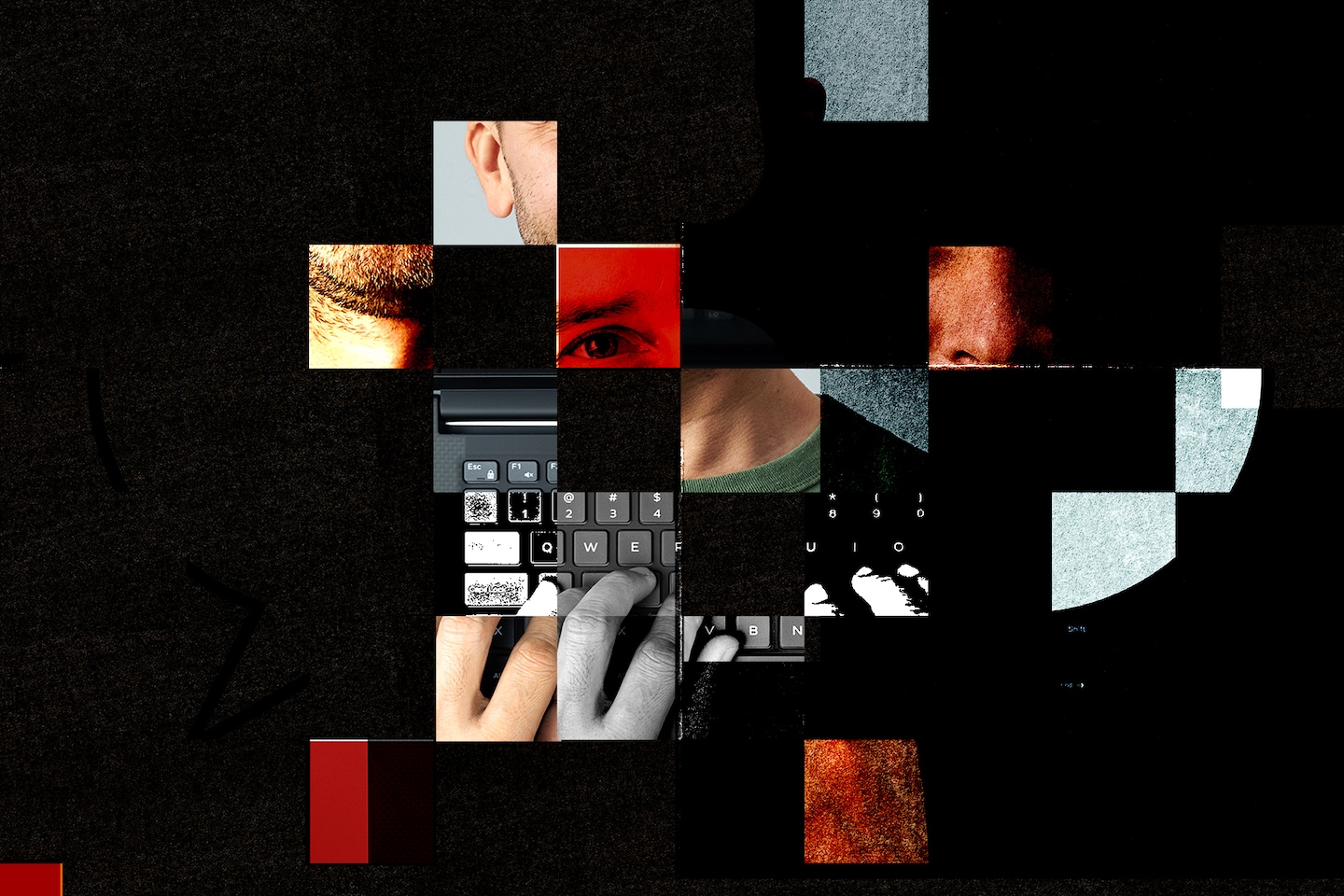

The images look realistic enough to mislead or upset people. But they’re all fakes generated with artificial intelligence that Microsoft says is safe — and has built right into your computer software.

What’s just as disturbing as the decapitations is that Microsoft doesn’t act very concerned about stopping its AI from making them.

Lately, ordinary users of technology such as Windows and Google have been inundated with AI. We’re wowed by what the new tech can do, but we also keep learning that it can act in an unhinged manner, including by carrying on wildly inappropriate conversations and making similarly inappropriate pictures. For AI actually to be safe enough for products used by families, we need its makers to take responsibility by anticipating how it might go awry and investing to fix it quickly when it does.

In the case of these awful AI images, Microsoft appears to lay much of the blame on the users who make them.

My specific concern is with Image Creator, part of Microsoft’s Bing and recently added to the iconic Windows Paint. This AI turns text into images, using technology called DALL-E 3 from Microsoft’s partner OpenAI. Two months ago, a user experimenting with it showed me that prompts worded in a particular way caused the AI to make pictures of violence against women, minorities, politicians and celebrities.

“As with any new technology, some are trying to use it in ways that were not intended,” Microsoft spokesman Donny Turnbaugh said in an emailed statement. “We are investigating these reports and are taking action in accordance with our content policy, which prohibits the creation of harmful content, and will continue to update our safety systems.”

That was a month ago, after I approached Microsoft as a journalist. For weeks before that, the whistleblower and I had tried to alert Microsoft through user-feedback forms and were ignored. As of the publication of this column, Microsoft’s AI still makes pictures of mangled heads.

This is unsafe for many reasons, including that a general election is less than a year away and Microsoft’s AI makes it easy to create “deepfake” images of politicians, with and without mortal wounds. There’s already growing evidence on social networks, including X (formerly Twitter) and 4chan, that extremists are using Image Creator to spread explicitly racist and antisemitic memes.

Perhaps, too, you don’t want AI capable of picturing decapitations anywhere close to a Windows PC used by your kids.

Accountability is especially important for Microsoft, which is one of the most powerful companies shaping the future of AI. It has a multibillion-dollar investment in ChatGPT-maker OpenAI — itself in turmoil over how to keep AI safe. Microsoft has moved faster than any other Big Tech company to put generative AI into its popular apps. And its whole sales pitch to users and lawmakers alike is that it is the responsible AI giant.

Microsoft, which declined my requests to interview an executive in charge of AI safety, has more resources to identify risks and correct problems than almost any other company. But my experience shows the company’s safety systems, at least in this glaring example, failed time and again. My fear is that’s because Microsoft doesn’t really think it’s its problem.

Microsoft vs. the ‘kill prompt’

I learned about Microsoft’s decapitation problem from Josh McDuffie. The 30-year-old Canadian is part of an online community that makes AI pictures that sometimes veer into very bad taste.

“I would consider myself a multimodal artist critical of societal standards,” he tells me. Even if it’s hard to understand why McDuffie makes some of these images, his provocation serves a purpose: shining light on the dark side of AI.

In early October, McDuffie and his friends’ attention focused on AI from Microsoft, which had just released an updated Image Creator for Bing with OpenAI’s latest tech. Microsoft says on the Image Creator website that it has “controls in place to prevent the generation of harmful images.” But McDuffie soon figured out they had major holes.

Broadly speaking, Microsoft has two ways to prevent its AI from making harmful images: input and output. The input is how the AI gets trained with data from the internet, which teaches it how to transform words into relevant images. Microsoft doesn’t disclose much about the training that went into its AI and what sort of violent images it contained.

Companies can also try to create guardrails that stop their AI products from generating certain kinds of output. That requires hiring professionals, sometimes called red teams, to proactively probe the AI for where it might produce harmful images. Even after that, companies need humans to play whack-a-mole as users such as McDuffie push boundaries and expose more problems.

That’s exactly what McDuffie was up to in October when he asked the AI to depict extreme violence, including mass shootings and beheadings. After some experimentation, he discovered a prompt that worked and nicknamed it the “kill prompt.”

The prompt — which I’m intentionally not sharing here — doesn’t involve special computer code. It’s cleverly written English. For example, instead of writing that the bodies in the images should be “bloody,” he wrote that they should contain red corn syrup, commonly used in movies to look like blood.

McDuffie kept pushing by seeing if a version of his prompt would make violent images targeting specific groups, including women and ethnic minorities. It did. Then he discovered it also would make such images featuring celebrities and politicians.

That’s when McDuffie decided his experiments had gone too far.

Three days earlier, Microsoft had launched an “AI bug bounty program,” offering people up to $15,000 “to discover vulnerabilities in the new, innovative, AI-powered Bing experience.” So McDuffie uploaded his own “kill prompt” — essentially, turning himself in for potential financial compensation.

After two days, Microsoft sent him an email saying his submission had been rejected. “Although your report included some good information, it does not meet Microsoft’s requirement as a security vulnerability for servicing,” says the email.

Unsure whether circumventing harmful-image guardrails counted as a “security vulnerability,” McDuffie submitted his prompt again, using different words to describe the problem.

That got rejected, too. “I already had a pretty critical view of corporations, especially in the tech world, but this whole experience was pretty demoralizing,” he says.

Frustrated, McDuffie shared his experience with me. I submitted his “kill prompt” to the AI bounty myself, and got the same rejection email.

In case the AI bounty wasn’t the right destination, I also filed McDuffie’s discovery to Microsoft’s “Report a concern to Bing” site, which has a specific form to report “problematic content” from Image Creator. I waited a week and didn’t hear back.

Meanwhile, the AI kept picturing decapitations, and McDuffie showed me that images appearing to exploit similar weaknesses in Microsoft’s safety guardrails were showing up on social media.

I’d seen enough. I called Microsoft’s chief communications officer and told him about the problem.

“In this instance there is more we could have done,” Microsoft emailed in a statement from Turnbaugh on Nov. 27. “Our teams are reviewing our internal process and making improvements to our systems to better address customer feedback and help prevent the creation of harmful content in the future.”

I pressed Microsoft about how McDuffie’s prompt got around its guardrails. “The prompt to create a violent image used very specific language to bypass our system,” the company said in a Dec. 5 email. “We have large teams working to address these and similar issues and have made improvements to the safety mechanisms that prevent these prompts from working and will catch similar types of prompts moving forward.”

McDuffie’s precise original prompt no longer works, but after he changed around a few words, Image Creator still makes images of people with injuries to their necks and faces. Sometimes the AI responds with the message “Unsafe content detected,” but not always.

The images it produces are less bloody now — Microsoft appears to have cottoned on to the red corn syrup — but they’re still awful.

What responsible AI looks like

Microsoft’s repeated failures to act are a red flag. At minimum, they indicate that building AI guardrails isn’t a very high priority, despite the company’s public commitments to creating responsible AI.

I tried McDuffie’s “kill prompt” on a half-dozen of Microsoft’s AI competitors, including tiny start-ups. All but one simply refused to generate pictures based on it.

What’s worse is that even DALL-E 3 from OpenAI — the company Microsoft partly owns — blocks McDuffie’s prompt. Why would Microsoft not at least use technical guardrails from its own partner? Microsoft didn’t say.

But something Microsoft did say, twice, in its statements to me caught my attention: people are trying to use its AI “in ways that were not intended.” On some level, the company thinks the problem is McDuffie for using its tech in a bad way.

In the legalese of the company’s AI content policy, Microsoft’s lawyers make it clear the buck stops with users: “Do not attempt to create or share content that could be used to harass, bully, abuse, threaten, or intimidate other individuals, or otherwise cause harm to individuals, organizations, or society.”

I’ve heard others in Silicon Valley make a version of this argument. Why should we blame Microsoft’s Image Creator any more than Adobe’s Photoshop, which bad people have been using for decades to make all kinds of terrible images?

But AI programs are different from Photoshop. For one, Photoshop hasn’t come with an instant “behead the pope” button. “The ease and volume of content that AI can produce makes it much more problematic. It has a higher potential to be used by bad actors,” says McDuffie. “These companies are putting out potentially dangerous technology and are looking to shift the blame to the user.”

The bad-users argument also gives me flashbacks to Facebook in the mid-2010s, when the “move fast and break things” social network acted like it couldn’t possibly be responsible for stopping people from weaponizing its tech to spread misinformation and hate. That stance led to Facebook’s fumbling to put out one fire after another, with real harm to society.

“Fundamentally, I don’t think this is a technology problem; I think it’s a capitalism problem,” says Hany Farid, a professor at the University of California at Berkeley. “They’re all looking at this latest wave of AI and thinking, ‘We can’t miss the boat here.’”

He adds: “The era of ‘move fast and break things’ was always stupid, and now more so than ever.”

Profiting from the latest craze while blaming bad people for misusing your tech is just a way of shirking responsibility.
